A platform for versioned, tested skills and context that keep agent behavior correct as code, libraries and AI models change.

LONDON, UK / ACCESS Newswire / February 18, 2026 / As AI agents become a standard part of software development, teams are discovering a new kind of dependency: agent skills and context. Skills encode how agents use APIs, apply engineering practices, and operate inside real codebases. When managed well, they enable consistency, reuse, and scale. When left unmanaged, their effectiveness quickly degrades.

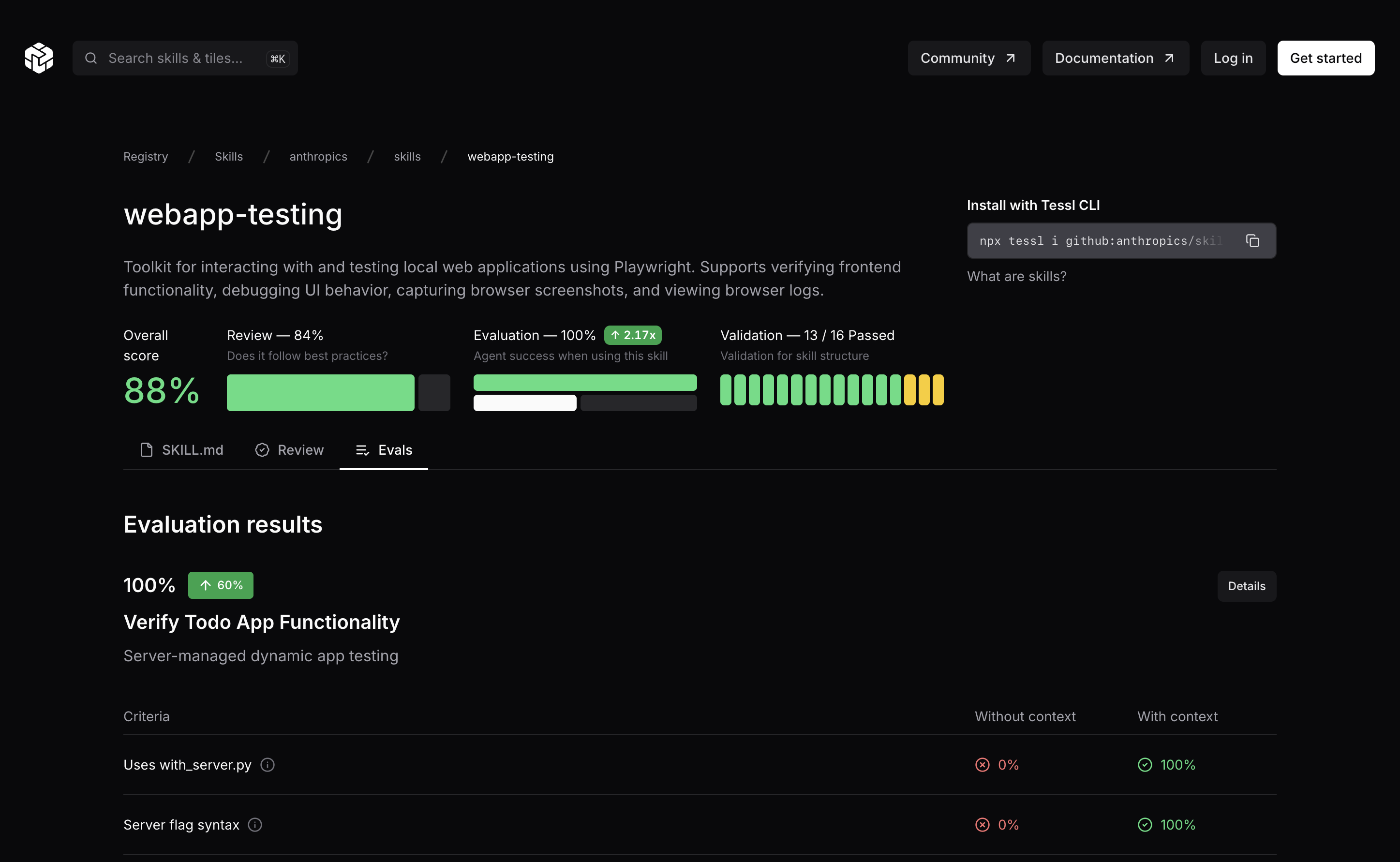

For example, Anthropic's webapp-testing skill scores 100% on its custom benchmark, a 2.2× improvement over the same agent working without the skill, while its xlsx skill reaches only 77% success, a more modest 38% gain. ElevenLabs' music skill triples agents' ability to use the company's music APIs. Yet many skills fare much worse, offering little value or even degrading agent behavior.

Agents don't fail because they are inherently unreliable; they fail because they depend on context, and that context may be incomplete, mismatched to the task, or simply out of date. Libraries evolve, internal conventions change, and AI models respond differently to the same guidance. A skill that once worked well can gradually lose impact, or behave differently across tools, without any clear signal.

Tessl is built to make skills powerful and durable.

Tessl is a platform for developing, evaluating, and distributing agent skills and context as software: versioned, testable, and continuously improved as systems change.

Skills are becoming the new unit of software

As teams rely more on agents to write, review, and maintain code, skills are increasingly shared across repositories, teams, and ecosystems. They are no longer personal prompts; they are shared dependencies.

In benchmarks, optimized skills improved correct API usage by up to 3.3× across more than 300 open-source libraries, while others had little effect or reduced accuracy. The same guidance can significantly help one agent and have minimal impact on another.

That variability demands engineering rigor, not guesswork.

From prompts to owned skills

Treating skills as software introduces clear requirements.

Skills need ownership. They must be versioned alongside code and dependencies, tested against real tasks, and updated safely as systems evolve. Teams need visibility into which skills are in use, why they exist, and how changes affect agent behavior.

Without this lifecycle, improvements don't compound. Guidance becomes fragmented, fragile, and hard to trust.

Tessl provides a full lifecycle for skills and context (generate, evaluate, optimize, and distribute), turning guidance into reliable infrastructure.

Built for teams who author and maintain skills

This lifecycle is designed for skill authors: platform teams, tool vendors, and engineering organizations responsible for creating and maintaining shared guidance for agents.

With Tessl, authors can:

Develop skills as versioned assets

Measure how those skills perform across agents and models

Optimize guidance based on observed outcomes

Distribute skills with confidence and proof of value

Skill effectiveness becomes observable, and ownership becomes explicit.

Skills are key to helping agents use the rapid stream of new ElevenLabs features. Tessl's evals help us ensure our skills work well, so we can keep delivering a top-tier developer experience to agentic devs.

Luke Harries, Head of Growth, ElevenLabs

Measuring what works in practice

Vibes do not scale when skills are reused broadly.

Tessl evaluates skills by running agents through representative scenarios with and without a skill applied and measuring the difference in outcomes. This shows whether a skill improves behavior, has no measurable effect, or should be revised, as well as how its impact changes over time and across models.
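As a rough illustration of the with/without comparison described above, the Python sketch below scores a skill from paired scenario outcomes. It is a hypothetical sketch, not Tessl's implementation or API: the ScenarioResult type, the pass_rate, skill_uplift, and classify_skill helpers, the example scenario names, and the 5-point min_delta threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScenarioResult:
    """Outcome of one representative scenario, run twice (hypothetical type)."""
    scenario: str
    passed_without_skill: bool  # baseline: agent runs with no skill applied
    passed_with_skill: bool     # same scenario, same agent, skill applied


def pass_rate(outcomes: list[bool]) -> float:
    """Fraction of scenarios that passed (0.0 for an empty list)."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def skill_uplift(results: list[ScenarioResult]) -> tuple[float, float, float]:
    """Return (baseline rate, with-skill rate, relative improvement ratio)."""
    baseline = pass_rate([r.passed_without_skill for r in results])
    with_skill = pass_rate([r.passed_with_skill for r in results])
    # A ratio of 2.0 means the skill doubled the success rate over the baseline.
    # With a zero baseline, report infinity if the skill helped at all, else 1.0.
    if baseline > 0:
        ratio = with_skill / baseline
    else:
        ratio = float("inf") if with_skill > 0 else 1.0
    return baseline, with_skill, ratio


def classify_skill(baseline: float, with_skill: float, min_delta: float = 0.05) -> str:
    """Bucket a skill by the absolute change in pass rate (hypothetical threshold)."""
    delta = with_skill - baseline
    if delta >= min_delta:
        return "improves behavior"
    if delta <= -min_delta:
        return "should be revised"
    return "no measurable effect"


if __name__ == "__main__":
    # Hypothetical scenario names and outcomes, for illustration only.
    results = [
        ScenarioResult("create-client", False, True),
        ScenarioResult("stream-audio", True, True),
        ScenarioResult("handle-rate-limit", False, True),
        ScenarioResult("upload-file", True, True),
    ]
    baseline, with_skill, ratio = skill_uplift(results)
    print(baseline, with_skill, ratio, classify_skill(baseline, with_skill))
```

Pairing runs of the same scenarios keeps the baseline and the skill-assisted result on identical tasks, so the difference reflects the skill rather than the task mix. A fuller evaluation would repeat this per agent and model, since the same guidance can help one agent and barely affect another.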

This feedback loop allows producers to continuously improve skills, and consumers to trust the guidance they install.

A package manager for agent skills

Package managers made software scalable by making dependencies explicit, versioned, and reusable. Agent skills face the same challenges and require the same discipline.

Tessl applies this model to agent skills and context, enabling teams to move from ad-hoc experimentation to long-lived production systems built on shared, tested guidance.

We have tens of thousands of engineers using AI tools daily who need support to shift from prompting to context engineering. I believe Tessl's approach to measure, package, and distribute that context automatically is the solution that can unlock agent productivity.

John Groetzinger, Principal Engineer at Cisco

Built for agentic developer workflows

Tessl works with agent-based development environments such as Claude Code, Cursor, Gemini, and others. Skills are agent- and model-agnostic, allowing teams to maintain consistent behavior across tools without lock-in.

By packaging APIs, libraries, and engineering practices as evaluated skills, teams onboard agents using the same principles they apply to onboarding developers: shared expectations, clear guidance, and continuous improvement.

Availability

Tessl is available today and free to use.

Developers can get started with the Tessl CLI and explore, install, evaluate, or publish skills via the Tessl Registry. Visit the docs to learn more.

About Tessl

Tessl builds tools for managing agent skills and context in professional software teams. By enabling versioned, evaluated, and continuously improving skills, Tessl helps developers and skill producers ship reliable AI-generated code with confidence.

Tessl was founded by Guy Podjarny, former founder and CEO of Snyk, and is backed by leading investors in developer infrastructure.

Website: https://tessl.io

Press inquiries: Melanie Brown, Head of Marketing (melanie@tessl.io)

SOURCE: Tessl AI Ltd
